Dark Web Marketplace Reveals Over 101,000 Stolen ChatGPT Accounts

According to data from dark web marketplaces, more than 101,000 ChatGPT user accounts have been stolen by information-stealing malware over the past year.

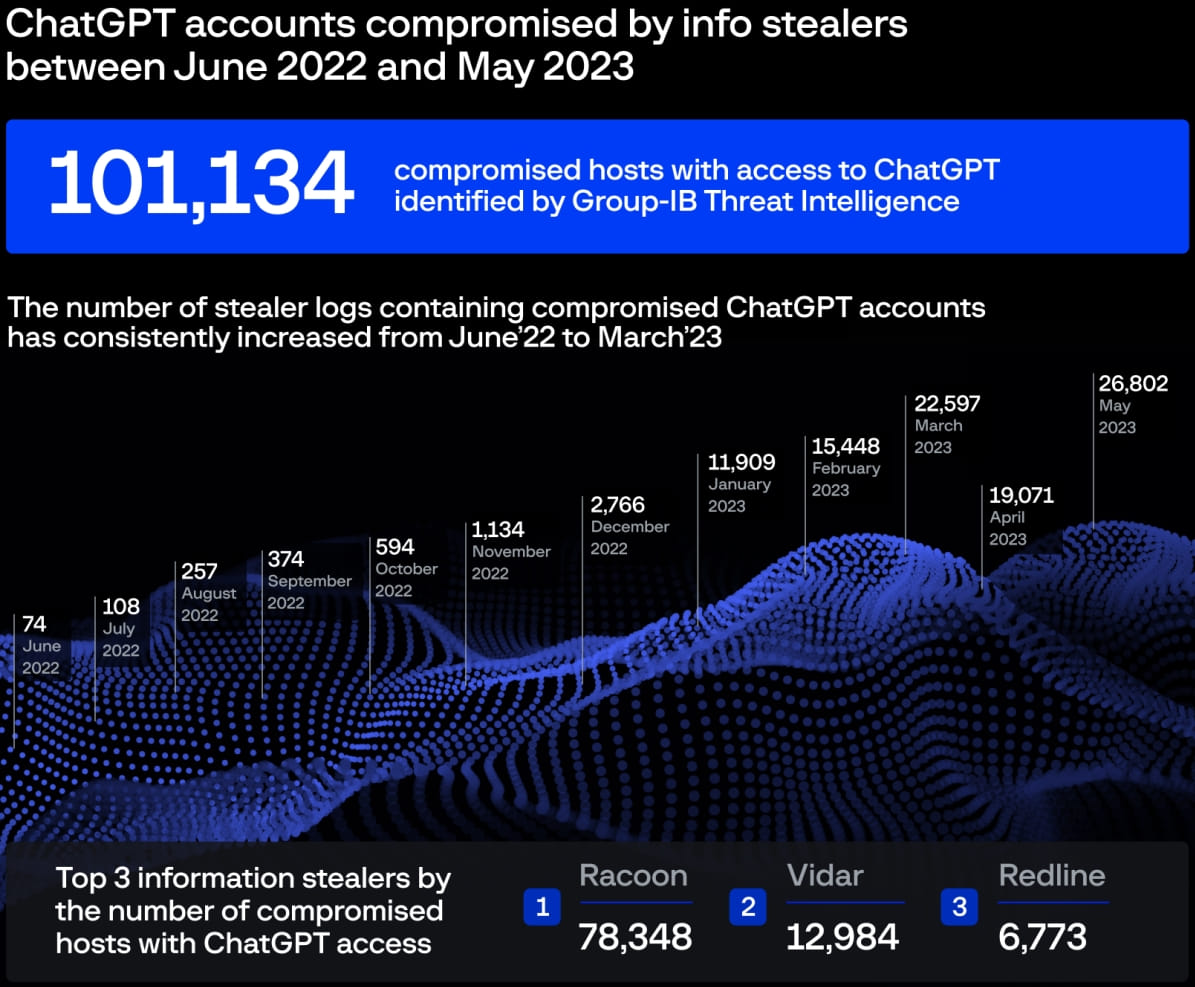

The cyberintelligence firm Group-IB found more than a hundred thousand information-stealer logs containing ChatGPT accounts on various underground websites. The number peaked in May 2023, when threat actors posted 26,800 new ChatGPT credential pairs.

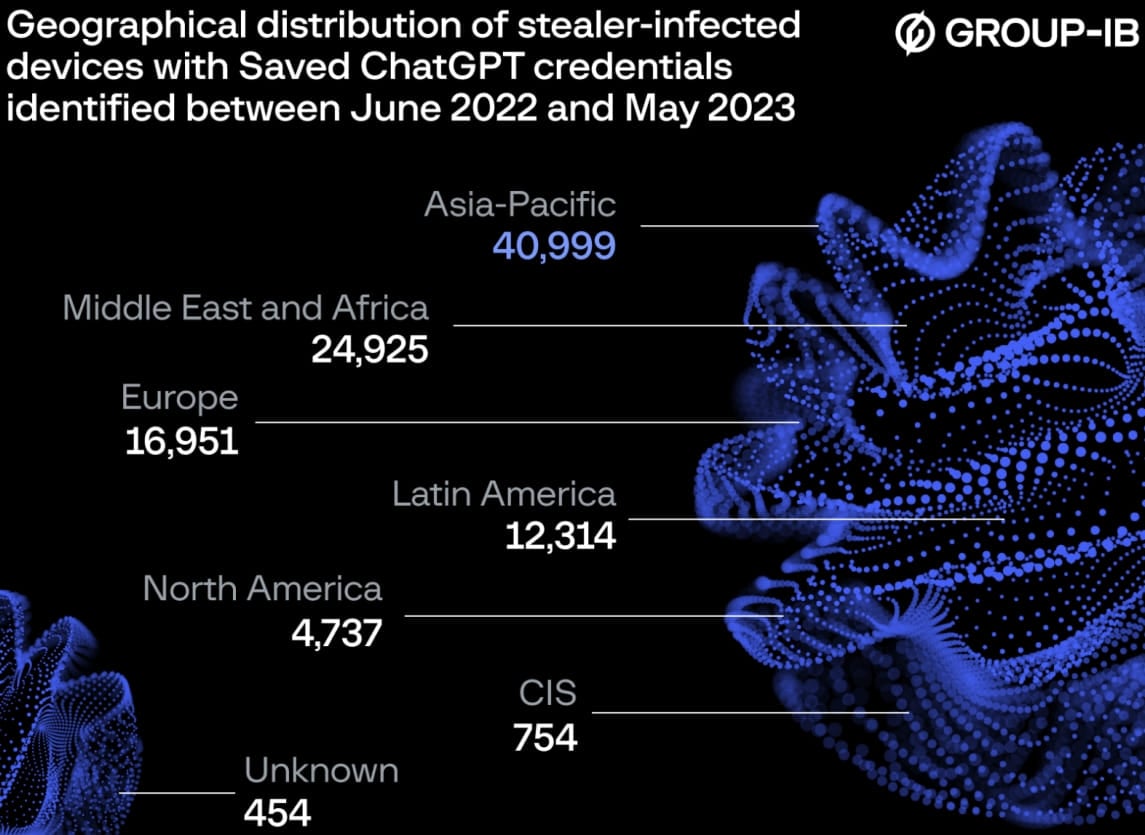

The Asia-Pacific region was the most targeted, with nearly 41,000 compromised accounts between June 2022 and May 2023, followed by Europe with almost 17,000. Surprisingly, North America ranked fifth, with 4,700 compromised accounts.

Victim distribution (Group-IB)

Information stealers are a type of malware that targets account data stored in applications such as email clients, web browsers, instant messengers, gaming services, and cryptocurrency wallets. They extract the credentials that web browsers save in their local databases and then reverse the encryption protecting those stored secrets.

The stolen credentials, along with other pilfered data, are compiled into archives known as logs and sent back to the attackers’ servers for retrieval.

The fact that ChatGPT accounts now appear in these logs alongside email accounts, credit card information, and cryptocurrency wallet data highlights the growing importance of AI-powered tools for both individual users and businesses. Because ChatGPT stores conversations by default, unauthorized access to an account can expose proprietary information, internal business strategies, personal communications, and even software code.

Enterprises integrating ChatGPT into their workflows are particularly vulnerable, as employees may unwittingly provide threat actors with a wealth of sensitive intelligence if their account credentials are obtained.

In response to these concerns, major tech companies like Samsung have implemented strict policies banning the use of ChatGPT on work computers, even threatening termination for non-compliance.

Group-IB's data shows that the number of stolen ChatGPT logs has grown steadily over time. Roughly 80% of the logs are attributed to the Raccoon stealer, followed by Vidar (13%) and RedLine (7%).

Compromised ChatGPT accounts (Group-IB)

If you input sensitive data into ChatGPT, it is advisable to disable the chat saving feature from the platform’s settings menu or manually delete conversations as soon as you are finished using the tool. However, it is important to note that many information stealers also capture screenshots or perform keylogging, so even if conversations are not saved, a malware infection could still lead to a data breach.

ChatGPT has also already suffered a data breach in which some users could see other users' personal information and chat queries. Those handling highly sensitive information should therefore exercise caution and consider using locally built, self-hosted tools rather than cloud-based services.

Source: bleepingcomputer.com