Sleepy Pickle: New Attack Targets Machine Learning Models

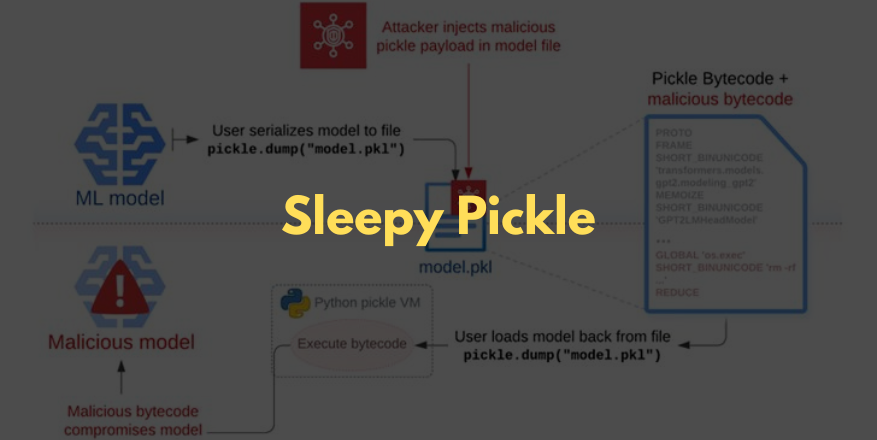

Sleepy Pickle technique

The Sleepy Pickle technique involves embedding a malicious payload into a pickle file using open-source tools like Fickling. The payload can then be delivered to a target through various methods, including adversary-in-the-middle (AitM) attacks, phishing, supply chain compromises, or exploiting system weaknesses.
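
A pickle file can carry a payload because the format lets an object name, through `__reduce__`, a callable and its arguments. The unpickler then invokes that callable while loading. The harmless sketch below shows only this underlying Python mechanism. It is not Fickling's API or Trail of Bits' code. `Payload`, `record_side_effect`, and `EVENTS` are hypothetical names, and the "payload" only appends to a list.

```python
import pickle

# Hypothetical stand-in for whatever side effect a payload might trigger; harmless here.
EVENTS = []


def record_side_effect(message):
    # Invoked by the unpickler while it reconstructs the object.
    EVENTS.append(message)
    return message


class Payload:
    def __reduce__(self):
        # pickle stores a reference to the callable plus its arguments;
        # pickle.loads() will call record_side_effect("ran during load").
        return (record_side_effect, ("ran during load",))


data = pickle.dumps(Payload())   # serializing does not run the callable
assert EVENTS == []

result = pickle.loads(data)      # deserializing does run it
print(result)                    # → ran during load
print(EVENTS)                    # → ['ran during load']
```

The object returned by `pickle.loads()` is whatever the callable returns, not a `Payload` instance. This is why simply loading an untrusted pickle is enough to execute attacker-chosen code.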

“When the file is deserialized on the victim’s system, the payload executes and modifies the model in-place, potentially inserting backdoors, controlling outputs, or tampering with processed data before returning it to the user,” said Trail of Bits researcher Boyan Milanov.

In practical terms, the injected payload can alter the model’s behavior by tampering with its weights or with its input and output data. This could lead to harmful outputs or misinformation (such as false medical advice), theft of user data when specific conditions are met, or indirect attacks on users, for example by inserting links to phishing pages into summaries of news articles.
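
The in-place tampering described above can be made concrete with a toy sketch. This is an illustration under stated assumptions, not Trail of Bits' payload or Fickling's API. `ToyModel`, `tamper_on_load`, `PoisonedWrapper`, and the trigger value `1337` are all hypothetical. The wrapper's `__reduce__` makes `pickle.loads()` pass the freshly deserialized model to `tamper_on_load`, which scales a weight and installs a backdoored `predict`. The caller then gets back an object that still looks like an ordinary `ToyModel`.

```python
import pickle


class ToyModel:
    """Hypothetical stand-in for an ML model: one weight and a predict method."""

    def __init__(self, weight):
        self.weight = weight

    def predict(self, x):
        return self.weight * x


def tamper_on_load(model):
    # Hypothetical payload: runs inside pickle.loads() and edits the model in place.
    model.weight *= 10                    # silently alter a "weight"
    clean_predict = model.predict         # bound method captured before patching

    def backdoored_predict(x):
        if x == 1337:                     # hypothetical trigger input
            return 0                      # attacker-controlled output
        return clean_predict(x)

    model.predict = backdoored_predict    # instance attribute shadows the method
    return model                          # caller receives a normal-looking model


class PoisonedWrapper:
    def __init__(self, model):
        self.model = model

    def __reduce__(self):
        # On load, call tamper_on_load(<deserialized model>).
        return (tamper_on_load, (self.model,))


clean = ToyModel(weight=2)
blob = pickle.dumps(PoisonedWrapper(clean))

loaded = pickle.loads(blob)
print(type(loaded).__name__)   # → ToyModel
print(loaded.predict(3))       # → 60 (weight was silently changed from 2 to 20)
print(loaded.predict(1337))    # → 0 (backdoor trigger)
```

According to the description above, an actual Sleepy Pickle payload is injected into an existing model file with a tool such as Fickling rather than built from a wrapper class. The sketch only shows why the victim ends up holding an already-modified model.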

Trail of Bits highlighted that Sleepy Pickle lets threat actors maintain stealthy access to ML systems. Detection is challenging because the model is compromised at the moment the pickle file is loaded within the Python process.

Source: thehackernews.com